5. mOS Code Examples

We illustrate how the mOS APIs can be used to write packet- and flow-level monitoring and manipulation code for network middleboxes. Before reading the code examples below, we suggest that readers go through the mOS Programming API section first.

Note

Here we explain only the mOS monitoring socket APIs, not the mOS end socket APIs. The end socket APIs are inherited from mTCP and follow almost the same semantics as the Berkeley socket APIs; please refer to the mTCP paper for more details.

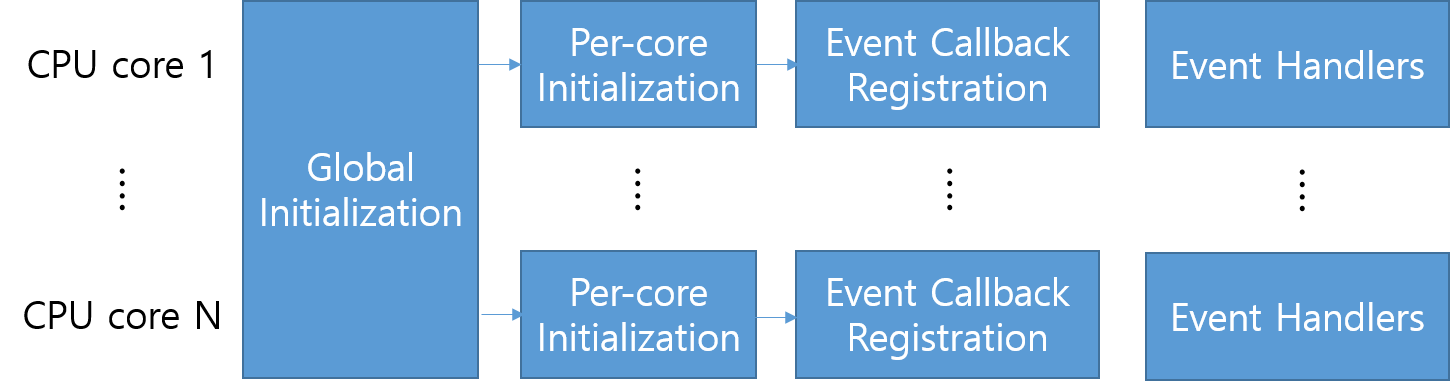

We first show how typical mOS applications look, and explain the overall workflow of mOS applications. As shown below, typical mOS applications can be broken into four sections.

Figure 5.1. mOS application overall workflow

An mOS application first initializes the mOS global internal data structures (Section 5.1). Next, it spawns mOS threads, which run in parallel on each CPU core, and initializes the per-thread internal data structures (Section 5.2). Once the mOS threads are spawned, the application can register handler (callback) functions for flow events; the mOS threads monitor flow behavior and raise an event whenever that behavior meets the event's condition (Section 5.3). When an event callback is triggered, the application can inspect the flow and perform the desired middlebox actions (Sections 5.4 and 5.5). Finally, the application tears down the per-core and global mOS state (Section 5.6).
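To make the event model described above concrete, here is a toy, self-contained C sketch of the idea: an event is a base event plus an optional filter predicate, and a registered callback runs only when its filter accepts the current packet. This is not the mOS API and does not reproduce mOS internals; every name in it (`toy_register`, `toy_raise`, `EV_PKT_IN`, `has_payload`, and so on) is hypothetical. In mOS itself, events are defined and registered with `mtcp_define_event()` and `mtcp_register_callback()` (Section 5.3).

```c
#include <stdbool.h>
#include <stddef.h>

/* Toy model only (NOT the mOS API): all names below are hypothetical. */
enum base_event { EV_PKT_IN, EV_CONN_NEW_DATA };

struct toy_pkt {
    int payload_len;                /* bytes of TCP payload */
};

typedef bool (*toy_filter_t)(const struct toy_pkt *pkt);
typedef void (*toy_callback_t)(const struct toy_pkt *pkt, void *user);

struct toy_handler {
    enum base_event base;           /* base event the handler is attached to */
    toy_filter_t filter;            /* NULL means "fire on every base event" */
    toy_callback_t cb;              /* action to run when the event fires */
    void *user;                     /* opaque argument passed to cb */
};

#define TOY_MAX_HANDLERS 8

struct toy_dispatcher {
    struct toy_handler handlers[TOY_MAX_HANDLERS];
    size_t n;                       /* number of registered handlers */
};

/* Register a (base event, filter, callback) triple; -1 if the table is full. */
int toy_register(struct toy_dispatcher *d, enum base_event base,
                 toy_filter_t filter, toy_callback_t cb, void *user)
{
    if (d->n == TOY_MAX_HANDLERS)
        return -1;
    d->handlers[d->n++] = (struct toy_handler){ base, filter, cb, user };
    return 0;
}

/* Raise a base event: run each handler on that event whose filter accepts
 * the packet. Returns the number of callbacks invoked. */
size_t toy_raise(struct toy_dispatcher *d, enum base_event base,
                 const struct toy_pkt *pkt)
{
    size_t fired = 0;
    for (size_t i = 0; i < d->n; i++) {
        const struct toy_handler *h = &d->handlers[i];
        if (h->base != base)
            continue;
        if (h->filter != NULL && !h->filter(pkt))
            continue;
        h->cb(pkt, h->user);
        fired++;
    }
    return fired;
}

/* Example filter: only packets that carry payload. */
bool has_payload(const struct toy_pkt *pkt)
{
    return pkt->payload_len > 0;
}

/* Example callback: count invocations through the user pointer. */
void count_cb(const struct toy_pkt *pkt, void *user)
{
    (void)pkt;
    ++*(int *)user;
}
```

Keeping the filter separate from the callback follows the same split as the Section 5.3 code, where `CatchInitSYN` is the filter given to `mtcp_define_event()` and `ApplyActionPerFlow` is the callback that does the work.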

5.1. Global Initialization Routine

```c
#include <stdio.h>
#include <stdlib.h>
#include <mos_api.h>    /* mOS API declarations */

/* CPU core hard limit set by the application (no trailing semicolon) */
#define MAX_CORES 32

/* path to the default mOS config file */
const char *config_path = "config/mos.conf";

/* a variable to hold mOS config information */
struct mtcp_conf g_mcfg;

/* initialize the mOS networking stack */
if (mtcp_init(config_path) < 0) {
    fprintf(stderr, "Failed to initialize mOS networking stack.\n");
    exit(-1);
}

/* override the number of cores used by mOS */
mtcp_getconf(&g_mcfg);
if (g_mcfg.num_cores > MAX_CORES)
    g_mcfg.num_cores = MAX_CORES;
mtcp_setconf(&g_mcfg);
```

5.2. Per-core Initialization Routine

```c
int i;
int sock;                       /* monitoring socket of the current core */
mctx_t g_mctx[MAX_CORES];       /* one mOS context per core */

for (i = 0; i < g_mcfg.num_cores; i++) {
    /* run an mOS thread on CPU core i and keep its context */
    if (!(g_mctx[i] = mtcp_create_context(i))) {
        fprintf(stderr, "Failed to create mtcp context.\n");
        exit(-1);
    }

    /* create a monitoring socket on core i */
    if ((sock = mtcp_socket(g_mctx[i], AF_INET, MOS_SOCK_MONITOR_STREAM, 0)) < 0) {
        fprintf(stderr, "Failed to create monitor listening socket!\n");
        exit(-1);
    }

    /* bind the monitor to a connection filter; a NULL filter matches all flows */
    if (mtcp_bind_monitor_filter(g_mctx[i], sock, NULL) < 0) {
        fprintf(stderr, "Failed to bind the monitor filter!\n");
        exit(-1);
    }
}
```
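The per-core model above (one mOS thread per CPU core, each with its own context) can be illustrated with plain POSIX threads. The sketch below is a conceptual analogy, not how mOS is implemented: `struct core_ctx`, `worker`, and `run_per_core` are hypothetical, the threads are not pinned to cores, and the "work" is a stand-in counter. In mOS, `mtcp_create_context()` and `mtcp_app_join()` play the spawning and joining roles. The point is that each worker writes only to its own context, so no locking is needed when results are combined after the join.

```c
#include <pthread.h>
#include <stddef.h>

#define DEMO_MAX_CORES 32

/* Hypothetical per-core context: each worker owns exactly one. */
struct core_ctx {
    int cpu;            /* index of the core this worker stands for */
    long pkts_seen;     /* written only by the owning worker */
};

/* Stand-in for per-core work: core i "processes" i + 1 packets. */
static void *worker(void *arg)
{
    struct core_ctx *ctx = arg;
    for (int k = 0; k <= ctx->cpu; k++)
        ctx->pkts_seen++;
    return NULL;
}

/* Spawn one worker per core, join them all, and return the total packet
 * count, or -1 if num_cores is out of range or a thread cannot be created. */
long run_per_core(struct core_ctx ctxs[], int num_cores)
{
    pthread_t tids[DEMO_MAX_CORES];
    int created = 0;
    long total = 0;

    if (num_cores < 1 || num_cores > DEMO_MAX_CORES)
        return -1;

    for (int i = 0; i < num_cores; i++) {
        ctxs[i] = (struct core_ctx){ .cpu = i, .pkts_seen = 0 };
        if (pthread_create(&tids[i], NULL, worker, &ctxs[i]) != 0)
            break;
        created++;
    }

    /* Join every thread that was started, even after a failure. */
    for (int i = 0; i < created; i++) {
        pthread_join(tids[i], NULL);
        total += ctxs[i].pkts_seen;
    }
    return created == num_cores ? total : -1;
}
```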

5.3. Event Callback Registration

```c
/* timer period used below: one second */
struct timeval tv_1sec = { .tv_sec = 1, .tv_usec = 0 };

/* register a callback for new data on monitored connections */
if (mtcp_register_callback(ctx->mctx, ctx->mon_listener,
        MOS_ON_CONN_NEW_DATA, MOS_HK_SND, ApplyActionPerFlow) == -1)
    EXIT_WITH_ERROR("Failed to register callback function\n");

/* user-defined event for the initial SYN packet:
 * MOS_ON_PKT_IN filtered by CatchInitSYN */
event_t initSYNEvent = mtcp_define_event(MOS_ON_PKT_IN, CatchInitSYN, NULL);
if (initSYNEvent == MOS_NULL_EVENT) {
    fprintf(stderr, "mtcp_define_event() failed!\n");
    exit(-1);
}

/* register a callback for the user-defined event defined above */
if (mtcp_register_callback(ctx->mctx, ctx->mon_listener,
        initSYNEvent, MOS_HK_SND, ApplyActionPerFlow) == -1)
    EXIT_WITH_ERROR("Failed to register callback func!\n");

/* CPU 0 is in charge of printing stats: dump the rule table every second */
if (ctx->mctx->cpu == 0 &&
    mtcp_settimer(ctx->mctx, ctx->mon_listener,
        &tv_1sec, DumpFWRuleTable))
    EXIT_WITH_ERROR("Failed to register timer callback func!\n");
```
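The `CatchInitSYN` filter passed to `mtcp_define_event()` is not shown in this document. Its core decision, whether a packet is the initial SYN of a connection, can be sketched independently of mOS as a test on the TCP flags byte: SYN set and ACK clear (a SYN/ACK is the server's reply, not the initial SYN). The function name `is_initial_syn` and its raw-header interface are assumptions for illustration; how a real mOS filter obtains the current packet depends on the mOS packet API and is omitted here.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* TCP flag bits, found in byte 13 of the TCP header. */
#define TCP_FLAG_SYN 0x02
#define TCP_FLAG_ACK 0x10

/* Hypothetical helper: true if the raw TCP header `tcp` (len bytes) is an
 * initial SYN (SYN set, ACK clear). Truncated headers are rejected. */
bool is_initial_syn(const uint8_t *tcp, size_t len)
{
    if (tcp == NULL || len < 20)    /* minimum TCP header is 20 bytes */
        return false;
    uint8_t flags = tcp[13];
    return (flags & TCP_FLAG_SYN) && !(flags & TCP_FLAG_ACK);
}
```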

5.4. Packet Processing Logic in Event Handlers

/* TBD */

5.5. Flow Processing Logic in Event Handlers

/* TBD */

5.6. Application Destroy Routine

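```c
for (i = 0; i < g_mcfg.num_cores; i++) {
    /* ctx is core i's per-core context (mctx and mon_listener, as in Section 5.3) */

    /* wait for the mOS thread to finish */
    mtcp_app_join(ctx->mctx);

    /* close the monitoring socket */
    mtcp_close(ctx->mctx, ctx->mon_listener);

    /* tear down the per-core mOS context */
    mtcp_destroy_context(ctx->mctx);
}

/* release the global mOS resources */
mtcp_destroy();
```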