4. mOS Programming API

We provide man pages that describe the mOS API; they are available at https://mos.kaist.edu/index_man.html. The mOS socket function prototypes are split across two header files: mos_api.h and mtcp_api.h. We also suggest going through the Sample Applications User Guide to see how the library can be used.

We discuss a few important mOS API functions in detail.

4.1. Initialization Routines

/**

* Initializes mOS base with parameters mentioned in config_file

*

* @param [in] config_file: path to the startup configuration file

* @return 0 on success, -1 on error

*/

int mtcp_init(const char *config_file);

Note

Make sure that you call mtcp_init() before launching the

mOS context (mtcp_create_context()).

/**

* Loads current mOS configuration in conf structure

*

* @param [out] conf: structure that receives the current configuration

* @return 0 on success, -1 on error

*/

int mtcp_getconf(struct mtcp_conf *conf);

struct mtcp_conf holds configuration parameters of mOS.

/**

* Updates mOS base with parameters mentioned in conf structure

*

* @param [in] conf

* @return 0 on success, -1 on error

*/

int mtcp_setconf(struct mtcp_conf *conf);

Note

Make sure that you call mtcp_setconf() before launching the

mOS context (mtcp_create_context()).

/*

* Creates the mOS/mTCP thread based on the parameters passed by

* mtcp_init() & mtcp_setconf() functions

*

* @param [in] cpu: The CPU core ID where one wants to run the mOS thread.

* @return mctx_t on success, NULL on error.

*/

mctx_t mtcp_create_context(int cpu);

Important

Don’t call mtcp_create_context() more than once for the same CPU id.

/**

* Destroy the context that was created by mOS/mTCP thread

*

* @param [in] mctx: mtcp context

* @return 0 on success, -1 on error.

*/

int mtcp_destroy_context(mctx_t mctx);

Note

This is usually called once the mOS thread is exiting.

/**

* Destroy the global mOS context

*

* @return 0 on success, -1 on error

*/

int mtcp_destroy();

Note

This is usually called once as the main() function exits.
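The notes above imply a fixed call order: global initialization, optional configuration changes, one context per CPU core, then teardown in reverse. The following is a minimal sketch of that ordering. Everything marked as a stand-in (the empty struct mtcp_conf, the mctx_t typedef, the stub bodies of the mtcp_* functions, and call_log) is invented so the sketch compiles by itself; a real application would include mos_api.h and mtcp_api.h instead. run_monitor() is a hypothetical driver function.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* ---- Stand-ins so this sketch compiles without the mOS library. ----
 * Real code includes mos_api.h / mtcp_api.h; the types and stub bodies
 * below are assumptions, not the library's definitions. */
struct mtcp_conf { int placeholder; };
struct mtcp_context { int cpu; };
typedef struct mtcp_context *mctx_t;

char call_log[128];                         /* records the call order */

void log_call(const char *name)
{
    strncat(call_log, name, sizeof(call_log) - strlen(call_log) - 1);
    strncat(call_log, ";", sizeof(call_log) - strlen(call_log) - 1);
}

int mtcp_init(const char *config_file) { log_call("init"); return config_file ? 0 : -1; }
int mtcp_getconf(struct mtcp_conf *conf) { (void)conf; log_call("getconf"); return 0; }
int mtcp_setconf(struct mtcp_conf *conf) { (void)conf; log_call("setconf"); return 0; }
int mtcp_destroy_context(mctx_t mctx) { (void)mctx; log_call("destroy_context"); return 0; }
int mtcp_destroy(void) { log_call("destroy"); return 0; }

mctx_t mtcp_create_context(int cpu)
{
    static struct mtcp_context ctx;         /* stub: a single fake context */
    ctx.cpu = cpu;
    log_call("create");
    return &ctx;
}

/* Hypothetical driver: brings mOS up on one core and tears it down again. */
int run_monitor(const char *config_file, int cpu)
{
    struct mtcp_conf conf;
    mctx_t mctx;

    assert(cpu >= 0);

    if (mtcp_init(config_file) < 0)         /* 1. global init comes first */
        return -1;

    if (mtcp_getconf(&conf) < 0 ||          /* 2. read, adjust, write back, */
        mtcp_setconf(&conf) < 0) {          /*    before any context exists */
        mtcp_destroy();
        return -1;
    }

    mctx = mtcp_create_context(cpu);        /* 3. at most once per CPU core */
    if (mctx == NULL) {
        mtcp_destroy();
        return -1;
    }

    /* ... create monitoring sockets, register callbacks, run ... */

    mtcp_destroy_context(mctx);             /* 4. as the mOS thread exits */
    mtcp_destroy();                         /* 5. as main() exits */
    return 0;
}
```

With the stubs in place, a successful run records the calls in the order init, getconf, setconf, create, destroy_context, destroy.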

4.2. mOS End TCP Socket API

This guide focuses on the monitoring API. For details on how to use the mTCP API, we recommend that readers refer to our mTCP paper.

Please see the following links to view commentary for each function:

4.3. mOS Monitoring Socket API

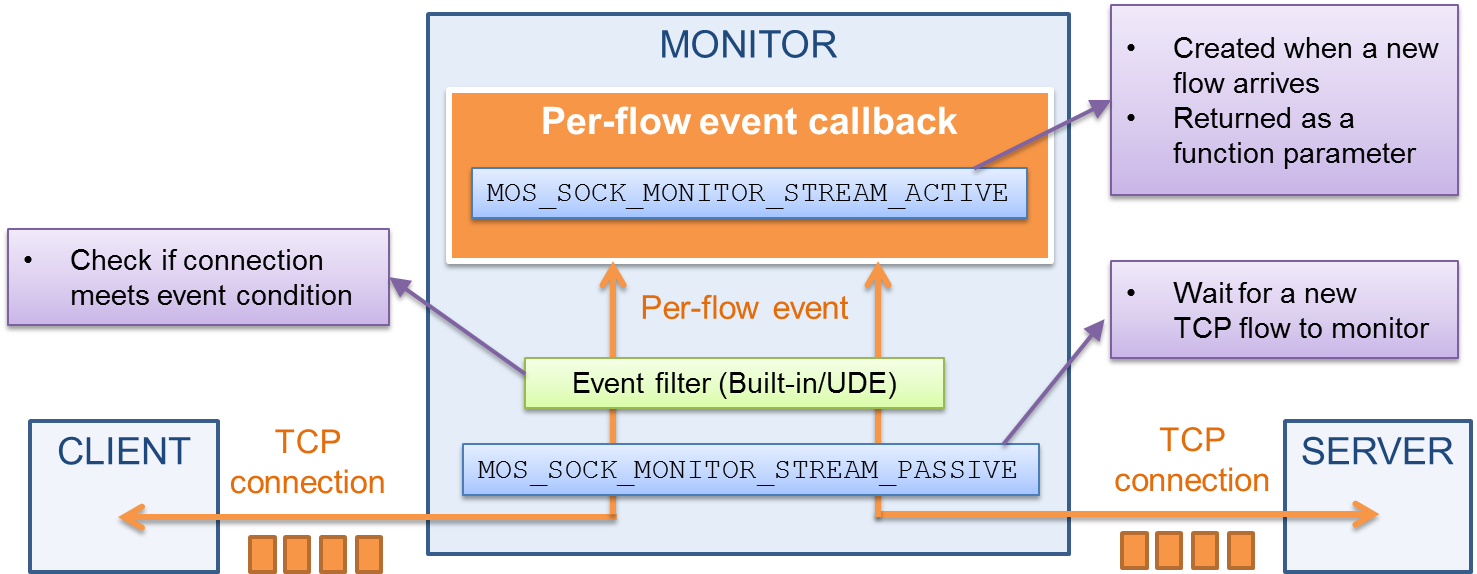

We next describe the operations and APIs of the mOS monitoring socket. The mOS callback APIs register and unregister callbacks for built-in and user-defined events. mtcp_register_callback() lets users register a callback function for all flows accepted from a listening socket, or register/unregister a callback function for a specific flow. We recommend going through this code snippet to see how a mOS application can be initialized.

Please see the following links to view commentary for each function that is normally used in initializing the monitoring sockets:

We provide two types of monitoring sockets:

Stream monitoring sockets (MOS_SOCK_MONITOR_STREAM) monitor traffic with complete flow semantics. They can perform bytestream analysis and provide payload reassembly support. We use stream monitoring sockets in this example code.

Raw monitoring sockets (MOS_SOCK_MONITOR_RAW) monitor traffic without flow semantics. All packets (irrespective of L3/L4 protocol ID) can be captured using this socket.

We provide a wide range of functions that a mOS developer can use to monitor packet context, maneuver the corresponding flow attributes, and even access the flow’s bytestream.

4.3.1. Packet Information Monitoring API

Once inside the callback handler, we can use mtcp_getlastpkt() to retrieve

packet information corresponding to the flow in context.

/*

* Fetch the most recent packet of the specified side for a given flow.

*

* @param [in] mctx: mTCP/mOS context

* @param [in] sock: monitoring socket ID (represents flow in case of stream monitoring sockets)

* @param [in] side: One of MOS_SIDE_CLI or MOS_SIDE_SVR (MOS_NULL for MOS_SOCK_MONITOR_RAW socket)

* @param [out] p: pointer to the pkt_info struct to fill in (only L2-L3 information is available for MOS_SOCK_MONITOR_RAW socket)

* @return 0 on success, -1 on failure

*/

int mtcp_getlastpkt(mctx_t mctx, int sock, int side, struct pkt_info *p);

The retrieved packet information is returned as struct pkt_info, and is read-only by default. The following are the member variables of the packet information structure, with their descriptions:

struct pkt_info {

uint32_t cur_ts; /*< time stamp when the packet is received */

int eth_len; /*< length of the ethernet header */

int ip_len; /*< length of the IP header */

uint64_t offset; /*< TCP ring buffer offset */

uint16_t payloadlen; /*< length of the TCP payload */

uint32_t seq; /*< host-order sequence number */

uint32_t ack_seq; /*< host-order acknowledge number */

uint16_t window; /*< TCP window size */

struct ethhdr *ethh; /*< ethernet header */

struct iphdr *iph; /*< IP header */

struct tcphdr *tcph; /*< TCP header */

uint8_t *payload; /*< TCP payload */

};

Note

When you retrieve packet information using mtcp_getlastpkt() via a MOS_SOCK_MONITOR_RAW socket, only the fields up to L3 are valid (cur_ts, eth_len, ip_len, ethh, iph).

Tip

Packet information cannot be retrieved from every callback function, because some built-in events are independent of packet reception. For example, the MOS_ON_CONN_END event is triggered when a TCP flow in the TIME_WAIT state is destroyed on timeout, and this event is not tied to any packet. In such cases, since no packet triggered the event, mtcp_getlastpkt() returns -1 without any packet information.

Attention

A packet arriving at a mOS monitor is stored as the last packet of the sending side's flow. This means that if a callback registered on the MOS_HK_RCV hook is triggered for the client side, the user must read the server flow’s last packet (and vice versa).

Note

For stream monitoring sockets, each connection stores copies of the two most recently observed packets: one from the client side and one from the server side. For raw sockets, only one copy of the packet is stored.
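As an illustration of how the fields of struct pkt_info can be used once mtcp_getlastpkt() has returned 0, here is a small sketch with two helpers. The struct is copied from the listing above so the code compiles by itself (real code gets it from mos_api.h); pkt_payload_end_seq() and pkt_summary() are hypothetical helper names, not part of the mOS API.

```c
#include <assert.h>
#include <inttypes.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

/* The header types are only used through pointers here, so forward
 * declarations suffice; real code gets them from the system headers. */
struct ethhdr;
struct iphdr;
struct tcphdr;

/* Copied from the listing above; real code gets this from mos_api.h. */
struct pkt_info {
    uint32_t cur_ts;
    int eth_len;
    int ip_len;
    uint64_t offset;
    uint16_t payloadlen;
    uint32_t seq;
    uint32_t ack_seq;
    uint16_t window;
    struct ethhdr *ethh;
    struct iphdr *iph;
    struct tcphdr *tcph;
    uint8_t *payload;
};

/* Sequence number just past this segment's payload. uint32_t arithmetic
 * wraps modulo 2^32, as TCP sequence numbers do. SYN and FIN flags, which
 * also consume one sequence number each, are not counted here. */
uint32_t pkt_payload_end_seq(const struct pkt_info *p)
{
    assert(p != NULL);
    return p->seq + p->payloadlen;
}

/* Formats a one-line summary of the L4 fields into out and returns the
 * snprintf() result. Only meaningful for stream monitoring sockets,
 * since raw sockets provide L2-L3 information only. */
int pkt_summary(const struct pkt_info *p, char *out, size_t outlen)
{
    assert(p != NULL && out != NULL);
    return snprintf(out, outlen,
                    "seq=%" PRIu32 " ack=%" PRIu32 " len=%" PRIu16 " win=%" PRIu16,
                    p->seq, p->ack_seq, p->payloadlen, p->window);
}
```

Both helpers take the struct by const pointer, which matches the read-only nature of the retrieved packet information.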

4.3.2. Packet Payload Modification API

If a received packet must be modified before it is forwarded, use mtcp_setlastpkt(). Called inside a callback function, it modifies the packet that triggered the callback event. The modifiable fields are the Ethernet header, the IP and TCP headers, the IP and TCP checksums, and the TCP payload. In an inline configuration, it is also possible to drop the packet instead of forwarding it by setting MOS_DROP in the option field.

Note

In inline configuration, the default policy for forwarding the packet is

determined by the forward parameter in the mtcp_setconf() function. An

mtcp_setlastpkt() call with MOS_DROP would override this policy.

Caution

You can only modify packet contents at the MOS_HK_SND hook (i.e. modification at MOS_HK_RCV is disallowed). This ensures that the simulated states of the sender and receiver in an ongoing connection stay consistent with the TCP states of the actual endpoints.

/**

* Updates the Ethernet frame at a given offset across

* datalen bytes.

*

* @param [in] mctx: mtcp context

* @param [in] sock: monitoring socket

* @param [in] side: monitoring side

* (MOS_NULL for MOS_SOCK_MONITOR_RAW socket)

* @param [in] offset: the offset from where the data needs to be written

* @param [in] data: the data buffer that needs to be written

* @param [in] datalen: the length of data that needs to be written

* @param [in] option: disjunction of MOS_ETH_HDR, MOS_IP_HDR, MOS_TCP_HDR,

* MOS_TCP_PAYLOAD, MOS_DROP_PKT, MOS_UPDATE_TCP_CHKSUM,

* MOS_UPDATE_IP_CHKSUM

* @return Returns 0 on success, -1 on failure

*

*/

int

mtcp_setlastpkt(mctx_t mctx, int sock, int side, off_t offset,

byte *data, uint16_t datalen, int option);
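The MOS_UPDATE_IP_CHKSUM and MOS_UPDATE_TCP_CHKSUM options exist because changing header or payload bytes invalidates the corresponding checksum. For reference, the sketch below computes the standard Internet checksum (RFC 1071) over an IPv4 header. It only illustrates what has to be recomputed after a modification; it is not taken from the mOS source, and inet_checksum() is a hypothetical helper name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Internet checksum (RFC 1071): one's-complement sum of 16-bit big-endian
 * words, folded to 16 bits and inverted. The checksum field inside the
 * data must be zero while computing. The 32-bit accumulator is ample for
 * packet-sized inputs (under 64 KiB). */
uint16_t inet_checksum(const uint8_t *data, size_t len)
{
    uint32_t sum = 0;
    size_t i;

    assert(data != NULL || len == 0);

    for (i = 0; i + 1 < len; i += 2)
        sum += ((uint32_t)data[i] << 8) | data[i + 1];
    if (i < len)                        /* odd trailing byte, zero-padded */
        sum += (uint32_t)data[i] << 8;

    while (sum >> 16)                   /* fold carries into the low 16 bits */
        sum = (sum & 0xffff) + (sum >> 16);

    return (uint16_t)~sum;
}
```

Running the same function over a header that already contains a correct checksum yields 0, which is a convenient way to verify a packet after modification.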

4.3.3. TCP Flow Information Monitoring API

/**

* Get flow information

*

* @param [in] mctx: mTCP/mOS context

* @param [in] sock: monitoring socket id

* @param [in] level: SOL_MONSOCKET (for monitoring purposes)

* @param [in] optname: variable

* @param [out] optval: buffer that receives the value of optname

* @param [in,out] optlen: size of optval

* @return 0 on success, -1 on error

*/

int mtcp_getsockopt(mctx_t mctx, int sock, int level, int optname, void *optval, socklen_t *optlen);

mtcp_getsockopt() helps retrieve flow information of a TCP flow. We

can use the following options for optname:

MOS_TCP_STATE_CLI or MOS_TCP_STATE_SVR returns the current emulated state of the client or server. The optval argument is a pointer to an int, and the optlen argument holds sizeof(int). The value returned in optval is of type enum tcpstate and can be any one of the following states.

enum tcpstate {

TCP_CLOSED = 0,

TCP_LISTEN,

TCP_SYN_SENT,

TCP_SYN_RCVD,

TCP_ESTABLISHED,

TCP_FIN_WAIT_1,

TCP_FIN_WAIT_2,

TCP_CLOSE_WAIT,

TCP_CLOSING,

TCP_LAST_ACK,

TCP_TIME_WAIT

};

MOS_INFO_CLIBUF and MOS_INFO_SVRBUF return metadata about the client’s or server’s TCP ring buffer. This information is returned in optval, which is passed as a struct tcp_buf_info. The optlen value holds sizeof(struct tcp_buf_info).

struct tcp_buf_info {

/* The initial TCP sequence number of TCP ring buffer. */

uint32_t tcpbi_init_seq;

/* TCP sequence number of the ’last byte of payload that has already

been read by the end application’ (applies in the case of embedded

monitor setup) */

uint32_t tcpbi_last_byte_read;

/* TCP sequence number of the ’last byte of the payload that is

currently buffered and needs to be read by the end application’

(applies in the case of embedded monitor setup). In case of standalone

monitors, tcpbi_last_byte_read = tcpbi_next_byte_expected */

uint32_t tcpbi_next_byte_expected;

/* TCP sequence number of the ’last byte of the payload that is

currently stored’ in the TCP ring buffer. This value may be

greater than tcpbi_next_byte_expected if packets arrive out of order. */

uint32_t tcpbi_last_byte_received;

};

MOS_FRAGINFO_CLIBUF and MOS_FRAGINFO_SVRBUF return the offsets of the fragments (non-contiguous data segments) currently stored in the client’s or server’s TCP ring buffer. The optval is an array of struct tcp_ring_fragment.

struct tcp_ring_fragment {

uint64_t offset;

uint32_t len;

};
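The values returned through these options are plain integers and structs, so they are easy to post-process. The sketch below shows a few helpers one might write on top of them. The enum and struct definitions are copied from the listings above so the code compiles by itself (real code gets them from mos_api.h); tcpstate_name(), buf_unread_bytes(), buf_has_out_of_order(), and fragments_total_len() are hypothetical names. How the number of returned fragments is reported is not covered here, so the caller supplies it as n.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Copied from the listings above; real code gets these from mos_api.h. */
enum tcpstate {
    TCP_CLOSED = 0, TCP_LISTEN, TCP_SYN_SENT, TCP_SYN_RCVD, TCP_ESTABLISHED,
    TCP_FIN_WAIT_1, TCP_FIN_WAIT_2, TCP_CLOSE_WAIT, TCP_CLOSING,
    TCP_LAST_ACK, TCP_TIME_WAIT
};

struct tcp_buf_info {
    uint32_t tcpbi_init_seq;
    uint32_t tcpbi_last_byte_read;
    uint32_t tcpbi_next_byte_expected;
    uint32_t tcpbi_last_byte_received;
};

struct tcp_ring_fragment {
    uint64_t offset;
    uint32_t len;
};

/* Printable name for a state value obtained via MOS_TCP_STATE_CLI/SVR. */
const char *tcpstate_name(int state)
{
    static const char *const names[] = {
        "CLOSED", "LISTEN", "SYN_SENT", "SYN_RCVD", "ESTABLISHED",
        "FIN_WAIT_1", "FIN_WAIT_2", "CLOSE_WAIT", "CLOSING",
        "LAST_ACK", "TIME_WAIT"
    };
    if (state < TCP_CLOSED || state > TCP_TIME_WAIT)
        return "UNKNOWN";
    return names[state];
}

/* Contiguous bytes that are buffered but not yet read. Unsigned
 * subtraction stays correct across 32-bit sequence wrap-around. */
uint32_t buf_unread_bytes(const struct tcp_buf_info *bi)
{
    assert(bi != NULL);
    return bi->tcpbi_next_byte_expected - bi->tcpbi_last_byte_read;
}

/* 1 if data beyond the contiguous region is stored, i.e. some
 * segments arrived out of order; 0 otherwise. */
int buf_has_out_of_order(const struct tcp_buf_info *bi)
{
    assert(bi != NULL);
    return bi->tcpbi_last_byte_received != bi->tcpbi_next_byte_expected;
}

/* Total number of bytes covered by n fragments. */
uint64_t fragments_total_len(const struct tcp_ring_fragment *frags, size_t n)
{
    uint64_t total = 0;
    size_t i;

    assert(frags != NULL || n == 0);
    for (i = 0; i < n; i++)
        total += frags[i].len;
    return total;
}
```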

4.3.4. TCP Flow Manipulation API

In addition to the packet modification API, mOS provides TCP flow manipulation APIs for TCP monitoring sockets.

/**

* Set flow information

*

* @param [in] mctx: mTCP/mOS context

* @param [in] sock: monitoring socket id

* @param [in] level: SOL_MONSOCKET (for monitoring purposes)

* @param [in] optname: variable

* @param [in] optval: value to set for optname

* @param [in] optlen: size of optval

* @return 0 on success, -1 on error

*/

int

mtcp_setsockopt(mctx_t mctx, int sock, int level, int optname, const void *optval, socklen_t optlen);

mtcp_setsockopt() can be used to manipulate TCP flows. We can configure flows using the following options for optname:

MOS_CLIBUF or MOS_SVRBUF dynamically adjusts the size of the TCP receive buffer of the emulated client or server stack. The optval contains the size of the buffer to set, as an int, while optlen is equal to sizeof(int).

MOS_CLIOVERLAP or MOS_SVROVERLAP dynamically determines the policy on content overlap (e.g., whether to overwrite buffered data with a retransmitted payload) for the client-side or server-side buffer. The optval can be either MOS_OVERLAP_POLICY_FIRST (to take the first data and never overwrite the buffer) or MOS_OVERLAP_POLICY_LAST (to always update the buffer with the last data), and optlen is equal to sizeof(int).

MOS_STOP_MON dynamically stops monitoring a flow for the specified side. This option can be used only with a MOS_SOCK_MONITOR_ACTIVE socket, which is passed as a parameter to callback functions for every flow. The optval contains a side variable (MOS_SIDE_CLI, MOS_SIDE_SVR, or MOS_SIDE_BOTH), while optlen is equal to sizeof(int).
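To make the two overlap policies concrete, here is a small standalone model of a buffer that receives overlapping segments. It is not mOS code and does not reflect how the library implements its ring buffer; model_buf, model_store(), POLICY_FIRST, and POLICY_LAST are invented stand-ins for the behavior described for MOS_OVERLAP_POLICY_FIRST and MOS_OVERLAP_POLICY_LAST.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Invented stand-ins for MOS_OVERLAP_POLICY_FIRST / MOS_OVERLAP_POLICY_LAST. */
enum overlap_policy { POLICY_FIRST, POLICY_LAST };

/* Toy reassembly buffer: data bytes plus a map of which bytes are set. */
struct model_buf {
    uint8_t data[64];
    uint8_t filled[64];     /* 1 where a byte has already been stored */
};

/* Stores len bytes from src at offset off. Under POLICY_FIRST, bytes that
 * were already stored are kept; under POLICY_LAST they are overwritten.
 * Returns the number of bytes written, or -1 if the range does not fit. */
int model_store(struct model_buf *b, size_t off, const uint8_t *src,
                size_t len, enum overlap_policy policy)
{
    size_t i;
    int written = 0;

    assert(b != NULL && src != NULL);

    if (off > sizeof(b->data) || len > sizeof(b->data) - off)
        return -1;

    for (i = 0; i < len; i++) {
        if (b->filled[off + i] && policy == POLICY_FIRST)
            continue;                   /* keep the first copy */
        b->data[off + i] = src[i];
        b->filled[off + i] = 1;
        written++;
    }
    return written;
}
```

For example, storing "AAAA" at offset 0 and then a retransmission "BBBB" at offset 2 leaves "AAAABB" under the first-data policy and "AABBBB" under the last-data policy.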

Note

If your application does not require payload inspection, you can turn off the TCP ring buffer using MOS_CLIBUF and MOS_SVRBUF. If you are no longer interested in a flow, you can turn monitoring off for that flow using MOS_STOP_MON.
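The two optimizations in this note could be wrapped in small helpers, as sketched below. Several things here are assumptions: that a buffer size of 0 is what switches the ring buffer off, and every definition marked as a stand-in (the mctx_t typedef, the numeric values of SOL_MONSOCKET, MOS_CLIBUF, MOS_SVRBUF, MOS_STOP_MON and MOS_SIDE_*, and the stub body of mtcp_setsockopt()). Those stand-ins only make the sketch compile by itself; real code includes mos_api.h and uses the library's own definitions. flow_disable_buffers() and flow_stop_monitoring() are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <sys/socket.h>     /* socklen_t */

/* ---- Stand-ins so the sketch compiles without the mOS library. ----
 * The numeric values are placeholders, NOT the ones in mos_api.h. */
typedef struct mtcp_context *mctx_t;
enum { SOL_MONSOCKET = 1 };
enum { MOS_CLIBUF = 1, MOS_SVRBUF, MOS_STOP_MON };
enum { MOS_SIDE_CLI = 0, MOS_SIDE_SVR, MOS_SIDE_BOTH };

int stub_calls;             /* how many requests the stub accepted */
int stub_last_optname;
int stub_last_value;

/* Stub: records the request instead of touching a real flow. */
int mtcp_setsockopt(mctx_t mctx, int sock, int level, int optname,
                    const void *optval, socklen_t optlen)
{
    (void)mctx;
    (void)sock;
    if (level != SOL_MONSOCKET || optval == NULL || optlen != sizeof(int))
        return -1;
    stub_calls++;
    stub_last_optname = optname;
    stub_last_value = *(const int *)optval;
    return 0;
}

/* Hypothetical helper: ask mOS not to buffer payload for either side.
 * Assumes that a size of 0 turns the buffer off. */
int flow_disable_buffers(mctx_t mctx, int sock)
{
    int size = 0;

    assert(sock >= 0);
    if (mtcp_setsockopt(mctx, sock, SOL_MONSOCKET, MOS_CLIBUF,
                        &size, sizeof(size)) < 0)
        return -1;
    return mtcp_setsockopt(mctx, sock, SOL_MONSOCKET, MOS_SVRBUF,
                           &size, sizeof(size));
}

/* Hypothetical helper: stop monitoring both directions of a flow. */
int flow_stop_monitoring(mctx_t mctx, int sock)
{
    int side = MOS_SIDE_BOTH;

    assert(sock >= 0);
    return mtcp_setsockopt(mctx, sock, SOL_MONSOCKET, MOS_STOP_MON,
                           &side, sizeof(side));
}
```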

Important

If you have MOS_ON_PKT_IN registered on MOS_HK_SND, you will not be able to call mtcp_setsockopt() or mtcp_getsockopt() on the server (MOS_SIDE_SVR) side for the TCP SYN packet, because only the client TCP context has been created at that point in time.

4.3.5. Bytestream Payload Monitoring API

/**

* Peek bytestream from the flow

*

* @param [in] mctx: mTCP/mOS context

* @param [in] sock: monitoring stream socket

* @param [in] side: monitoring side

* @param [in] buf: user allocated character array

* @param [in] len: length to be copied

* @return # of bytes actually read on success, -1 on failure

*/

int

mtcp_peek(mctx_t mctx, int sock, int side, char *buf, size_t len);

The bytestream can be monitored by calling mtcp_peek() on a monitoring socket. mtcp_peek() copies data from the flow's buffer into the buffer supplied by the application. If an application registers a callback on the MOS_ON_CONN_NEW_DATA event and calls mtcp_peek() inside the callback, it can read the contiguous bytestream that is currently available.

/**

* Peek with an offset.

*

* @param [in] mctx: mTCP/mOS context

* @param [in] sock: monitoring stream socket

* @param [in] side: monitoring side

* @param [in] buf: user allocated character array

* @param [in] count: length to be copied

* @param [in] seq_num: byte offset of the TCP bytestream

* @return # of bytes actually read on success, -1 on failure

*/

int

mtcp_ppeek(mctx_t mctx, int sock, int side, char *buf, size_t count, off_t seq_num);

If the user needs payload data starting from a specific byte offset, mtcp_ppeek() can be used. Unlike mtcp_peek(), it expects the caller to specify a stream offset (seq_num), which always starts from 0 (offset 0 is internally translated to the initial sequence number). In other words, seq_num is the absolute bytestream offset within the flow. If the requested offset is outside the range of the receive buffer, mtcp_ppeek() returns an error.

Note

The bytestream offset starts from zero (0) for every connection (i.e. the initial sequence number is mapped to offset 0). The bytestream offset is a 64-bit integer value, and we assume that it never wraps around.

Important

While we maintain both sides of the stack, we only provide receiver bytestream management (i.e. the send buffer bytestream management is not implemented). A developer interested in retrieving the bytestream of the sender can access the receive buffer of the peer flow instead (e.g. the send buffer of a client is functionally equivalent to the receive buffer of the server).
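Because mtcp_ppeek() takes a stream offset rather than a raw TCP sequence number, a sequence number taken from a packet has to be converted first. The sketch below shows one way to do that, assuming that offset 0 corresponds exactly to tcpbi_init_seq as reported via MOS_INFO_CLIBUF or MOS_INFO_SVRBUF. The struct is copied from the earlier listing so the code compiles by itself, and seq_to_stream_offset() is a hypothetical helper name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Copied from the earlier listing; real code gets this from mos_api.h. */
struct tcp_buf_info {
    uint32_t tcpbi_init_seq;
    uint32_t tcpbi_last_byte_read;
    uint32_t tcpbi_next_byte_expected;
    uint32_t tcpbi_last_byte_received;
};

/* Converts a 32-bit TCP sequence number into a bytestream offset.
 * The unsigned 32-bit subtraction handles sequence-number wrap-around,
 * but the result is only unambiguous within the first 4 GiB of the
 * stream; longer flows would need to track wraps separately. */
uint64_t seq_to_stream_offset(const struct tcp_buf_info *bi, uint32_t seq)
{
    assert(bi != NULL);
    return (uint64_t)(uint32_t)(seq - bi->tcpbi_init_seq);
}
```

The result can be passed as the seq_num argument of mtcp_ppeek(), subject to the assumption stated above.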

4.3.6. User-level Flow Context API

/**

* Set per-flow user context

*

* @param [in] mctx: mTCP/mOS context

* @param [in] sock: monitoring stream socket

* @param [in] uctx: per-flow user context

*/

void

mtcp_set_uctx(mctx_t mctx, int sock, void *uctx);

/**

* Get per-flow user context

*

* @param [in] mctx: mTCP/mOS context

* @param [in] sock: monitoring stream socket

* @return per-flow user context

*/

void *

mtcp_get_uctx(mctx_t mctx, int sock);

mOS allows users to store and retrieve a per-flow context. Using this API, user applications can store per-flow metadata and use it at any time during the flow’s lifetime in mOS.

Note

It is the user’s responsibility to manage the memory allocated for the per-flow user context. Remember to free any memory allocated for the context when the flow is about to be destroyed. It is a good idea to always register a callback for MOS_ON_CONN_END, where this memory can be freed.
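The allocate, use, and free pattern described in this note might look like the sketch below. The mctx_t typedef and the bodies of mtcp_set_uctx() and mtcp_get_uctx() are stand-ins (a fixed-size table indexed by socket ID) so that the code compiles without the mOS library; real code includes mos_api.h instead. struct flow_stats and the flow_stats_*() helpers are hypothetical, and the exact callback signatures they would be called from are not shown.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* ---- Stand-ins so the sketch compiles without the mOS library. ---- */
typedef struct mtcp_context *mctx_t;
#define STUB_MAX_SOCK 16
void *stub_uctx[STUB_MAX_SOCK];         /* one slot per socket ID */

void mtcp_set_uctx(mctx_t mctx, int sock, void *uctx)
{
    (void)mctx;
    if (sock >= 0 && sock < STUB_MAX_SOCK)
        stub_uctx[sock] = uctx;
}

void *mtcp_get_uctx(mctx_t mctx, int sock)
{
    (void)mctx;
    return (sock >= 0 && sock < STUB_MAX_SOCK) ? stub_uctx[sock] : NULL;
}

/* Hypothetical per-flow state kept by the application. */
struct flow_stats {
    uint64_t bytes_seen;
};

/* Call when the flow starts: allocate the state and attach it. */
int flow_stats_attach(mctx_t mctx, int sock)
{
    struct flow_stats *fs;

    assert(sock >= 0);
    fs = calloc(1, sizeof(*fs));
    if (fs == NULL)
        return -1;
    mtcp_set_uctx(mctx, sock, fs);
    return 0;
}

/* Call from data or packet callbacks to update the state. */
void flow_stats_add(mctx_t mctx, int sock, uint64_t nbytes)
{
    struct flow_stats *fs = mtcp_get_uctx(mctx, sock);

    if (fs != NULL)
        fs->bytes_seen += nbytes;
}

/* Call from the MOS_ON_CONN_END callback: free and clear the pointer. */
void flow_stats_release(mctx_t mctx, int sock)
{
    free(mtcp_get_uctx(mctx, sock));    /* free(NULL) is a no-op */
    mtcp_set_uctx(mctx, sock, NULL);
}
```

Clearing the stored pointer after freeing it avoids a dangling reference if a later callback for the same socket looks the context up again.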