5. mOS Code Walkthrough

Attention

This page is under revision. Some sections might be updated further.

We illustrate how mOS APIs can be used to program packet and flow monitoring and manipulation code for network middleboxes. Before reading the code examples below, we suggest that readers go through the mOS Programming API section first.

Note

Note that this page explains only the mOS monitoring socket APIs, not the mOS end socket APIs. The mOS end socket APIs are inherited from the mTCP APIs and follow almost the same semantics as the Berkeley socket APIs. Please refer to the mTCP paper for more details.

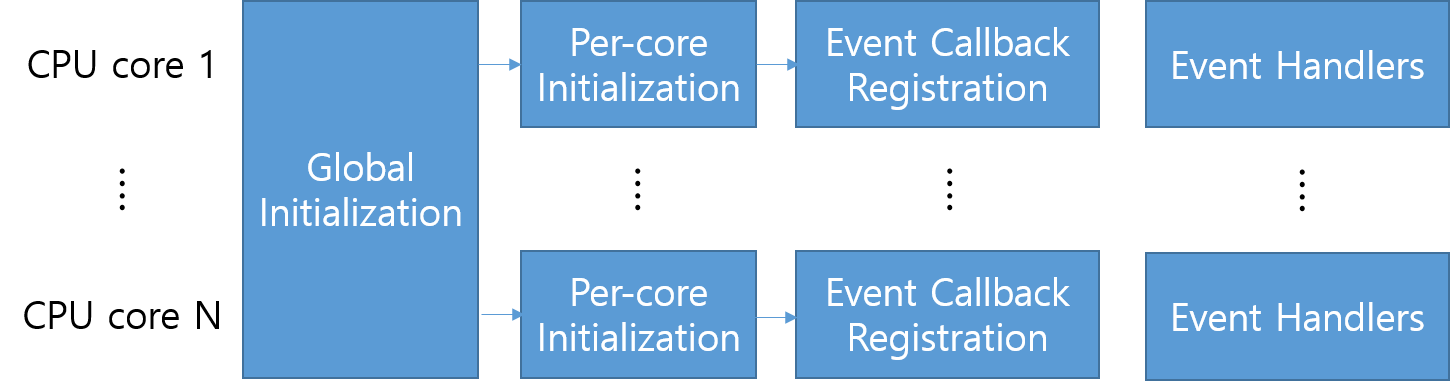

We first show how typical mOS applications look, and explain the overall workflow of mOS applications. As shown below, typical mOS applications can be broken into four sections.

Figure 5.1. mOS application overall workflow

A mOS application should first initialize the global mOS internal data structures (Section 5.1). Next, it should spawn mOS threads, which run in parallel on each CPU core, and initialize the per-thread internal data structures (Section 5.2). Once the mOS threads are spawned, the application can register handler (callback) functions for flow events; the mOS threads monitor flow behavior and raise an event whenever that behavior meets the event condition (Section 5.3). When an event callback is triggered, the application can inspect the flow and perform the desired middlebox actions (Section 5.4). Finally, when the application shuts down, it should clean up its internal metadata (Section 5.5).

5.1. Global Initialization Routine

A mOS application should start with the global initialization routine. As shown below, when mtcp_init() is called with the path to the mOS configuration file, it loads and initializes the configuration parameters of the mOS networking stack. mtcp_init() returns an error if the configuration file does not exist or its format is invalid. (See the Configuration Parameter Tuning section for more details.)

/* path to the default mOS config file */
const char *config_path = "config/mos.conf";

/* initialize the mOS networking stack */
if (mtcp_init(config_path) < 0) {
    fprintf(stderr, "Failed to initialize mOS networking stack.\n");
    exit(-1);
}

After mtcp_init() returns, the application can look up the configuration parameters with mtcp_getconf() to check whether they meet its requirements. To override a configuration parameter, the application can call mtcp_setconf() with the updated value. The example below checks the number of CPU cores to be used by mOS; if it exceeds the application's limit (32 cores), the parameter is capped at that limit.

/* CPU core hard limit set by the application (no trailing semicolon) */
#define MAX_CORES 32

/* a variable to hold mOS config information */
struct mtcp_conf g_mcfg;

/* cap the number of cores used by mOS at MAX_CORES */
mtcp_getconf(&g_mcfg);
if (g_mcfg.num_cores > MAX_CORES)
    g_mcfg.num_cores = MAX_CORES;
mtcp_setconf(&g_mcfg);

5.2. Per-core Initialization Routine

Once the global initialization routine is done, the next step is to initialize a mOS thread on each CPU core. When mtcp_create_context() is called with a CPU core ID, it spawns a mOS thread on that core. As shown below, we can use the number of CPU cores stored in the struct mtcp_conf variable (retrieved with mtcp_getconf()) to spawn a mOS thread on every available CPU core. On success, mtcp_create_context() returns an mctx_t value holding the per-core mOS internal metadata, which is passed to all subsequent mOS API calls on that core.

After the mOS threads are spawned, we create a mOS passive monitoring socket by calling mtcp_socket() with the MOS_SOCK_MONITOR_STREAM parameter. Through this passive monitoring socket, we can listen for the monitoring events of interest and perform the desired actions in response. Once the socket is created, mtcp_bind_monitor_filter() restricts monitoring to a specific set of flows, described in Berkeley Packet Filter (BPF) syntax.

int i;
int sock[MAX_CORES];
mctx_t g_mctx[MAX_CORES];

for (i = 0; i < g_mcfg.num_cores; i++) {
    /* run a mOS thread on each CPU core */
    if (!(g_mctx[i] = mtcp_create_context(i))) {
        fprintf(stderr, "Failed to create mtcp context.\n");
        exit(-1);
    }

    /* create a passive monitoring socket on this core's context */
    if ((sock[i] = mtcp_socket(g_mctx[i], AF_INET, MOS_SOCK_MONITOR_STREAM, 0)) < 0) {
        fprintf(stderr, "Failed to create monitor listening socket!\n");
        exit(-1);
    }

    /* bind monitor with connection filter (e.g., destined to 10.0.0.3:80) */
    monitor_filter ft = {0};
    ft.stream_syn_filter = "dst net 10.0.0.3 and dst port 80";
    if (mtcp_bind_monitor_filter(g_mctx[i], sock[i], ft) < 0) {
        fprintf(stderr, "Failed to bind monitor filter to the socket!\n");
        exit(-1);
    }
}

5.3. Event Callback Registration

Once the mOS monitoring socket is ready, we register for the monitoring events of interest. We briefly explain how to register for different types of events; for more details, see the mOS Event System section.

5.3.1. Registering for Built-in Events

Our first example registers a built-in event. To be notified whenever reassembled payload is available to read, register for the MOS_ON_CONN_NEW_DATA event by calling mtcp_register_callback(). Afterwards, the ApplyActionPerFlow callback function is triggered whenever new reassembled payload is ready.

/* register callback */
if (mtcp_register_callback(mctx, sock[i],
                           MOS_ON_CONN_NEW_DATA, MOS_NULL, ApplyActionPerFlow) == -1)
    EXIT_WITH_ERROR("Failed to register callback function\n");

5.3.2. Registering for User-defined Events (UDEs)

Our next example shows how to extend the built-in events with user-defined conditions. Suppose you want to capture the initial SYN packet from the client side (i.e., a packet with the SYN flag set and the ACK flag clear).

- First, create a filter function such as CatchInitSYN, which retrieves the packet information with mtcp_getlastpkt() and returns true only when the SYN flag is set and the ACK flag is not.

- Next, define a UDE called initSYNEvent with mtcp_define_event(), which derives initial-SYN events from MOS_ON_PKT_IN using the CatchInitSYN filter function. The third argument of mtcp_define_event() (NULL here) is a filter_arg_t parameter that can be used to pass an additional argument to the filter function.

- By registering the UDE initSYNEvent with mtcp_register_callback(), the callback function ApplyActionPerFlow is triggered only for initial SYN packets rather than for every packet. Because the callback is registered on the MOS_HK_SND hook point, it is triggered from the point of view of the sender of the SYN packet (i.e., the TCP client).

/* filter function for the initial SYN packet */
static bool
CatchInitSYN(mctx_t mctx, int sockid,
             int side, uint64_t events, filter_arg_t *arg)
{
    struct pkt_info p;

    if (mtcp_getlastpkt(mctx, sockid, side, &p) < 0)
        EXIT_WITH_ERROR("Failed to get packet context!!!\n");

    /* SYN set, ACK clear: the client's first packet */
    return (p.tcph->syn && !p.tcph->ack);
}

/* event for the initial SYN packet */
event_t initSYNEvent = mtcp_define_event(MOS_ON_PKT_IN, CatchInitSYN, NULL);
if (initSYNEvent == MOS_NULL_EVENT) {
    fprintf(stderr, "mtcp_define_event() failed!\n");
    exit(-1);
}

/* register callback */
if (mtcp_register_callback(ctx->mctx, ctx->mon_listener,
                           initSYNEvent, MOS_HK_SND, ApplyActionPerFlow) == -1)
    EXIT_WITH_ERROR("Failed to register callback func!\n");
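The condition that CatchInitSYN tests can be illustrated without mOS. The sketch below reads the flags byte (offset 13) of a raw TCP header instead of the p.tcph->syn and p.tcph->ack bitfields; is_initial_syn() and the raw-header input are assumptions made so the example is self-contained, not part of the mOS API.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* TCP flag bits, found in byte 13 of the TCP header (RFC 793) */
#define TCP_FLAG_SYN 0x02
#define TCP_FLAG_ACK 0x10

/* Hypothetical helper: true only for an initial SYN (SYN set, ACK clear).
 * Takes a raw TCP header instead of struct pkt_info. */
static bool is_initial_syn(const uint8_t *tcp_hdr, size_t len)
{
    if (len < 20)                      /* shorter than a minimal TCP header */
        return false;
    uint8_t flags = tcp_hdr[13];
    return (flags & TCP_FLAG_SYN) && !(flags & TCP_FLAG_ACK);
}
```

A SYN-ACK (flags 0x12) from the server is rejected, which is why the UDE fires only on the client's opening packet.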

5.3.3. Registering for Timer Events

If you want a callback function to be triggered periodically, use mtcp_settimer(). For example, to dump a firewall rule table every second, register the DumpFWRuleTable function on a timer with mtcp_settimer(). DumpFWRuleTable is then triggered after one second; to keep the dump periodic, DumpFWRuleTable itself should call mtcp_settimer() again so that it fires after the next second.

/* fire once per second */
struct timeval tv_1sec = {
    .tv_sec = 1,
    .tv_usec = 0
};

/* dump the firewall rule table (CPU 0 is in charge of printing stats) */
if (mctx->cpu == 0 && mtcp_settimer(mctx, sock[mctx->cpu], &tv_1sec, DumpFWRuleTable))
    EXIT_WITH_ERROR("Failed to register timer callback func!\n");
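As the text above implies, the timer fires once, so a periodic task re-arms itself from inside its own callback. The sketch below simulates that pattern with a virtual millisecond clock so it runs without mOS; oneshot_timer, arm(), run_until() and dump_rule_table() are hypothetical stand-ins for mtcp_settimer() and DumpFWRuleTable, not mOS APIs.

```c
#include <stdio.h>

/* Hypothetical one-shot timer with a single pending deadline. */
struct oneshot_timer {
    long deadline_ms;                              /* -1 when not armed */
    void (*cb)(struct oneshot_timer *t, long now_ms);
};

static int dump_count = 0;                         /* times the periodic job ran */

static void arm(struct oneshot_timer *t, long now_ms, long delay_ms,
                void (*cb)(struct oneshot_timer *, long))
{
    t->deadline_ms = now_ms + delay_ms;
    t->cb = cb;
}

/* Periodic job: do the work, then re-arm for the next second,
 * as DumpFWRuleTable is expected to call mtcp_settimer() again. */
static void dump_rule_table(struct oneshot_timer *t, long now_ms)
{
    dump_count++;
    printf("[%ld ms] dumping firewall rule table\n", now_ms);
    arm(t, now_ms, 1000, dump_rule_table);
}

/* Advance virtual time in 1 ms steps and fire the timer when it expires. */
static void run_until(struct oneshot_timer *t, long end_ms)
{
    for (long now = 0; now <= end_ms; now++) {
        if (t->deadline_ms >= 0 && now >= t->deadline_ms) {
            t->deadline_ms = -1;                   /* one-shot: disarm before firing */
            t->cb(t, now);
        }
    }
}
```

Arming at time 0 and running for 3500 ms fires the job at 1000, 2000 and 3000 ms and leaves it armed for 4000 ms. If the callback forgot to re-arm, it would fire only once.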

5.4. Packet/Flow Processing Logic in Event Handlers

Whenever a monitoring event of interest to a mOS application occurs, the corresponding callback function registered via mtcp_register_callback() is triggered. Inside the callback function, the application can call mOS API functions to monitor, and act on, the packet or flow that triggered the event.

In the following subsections, we describe how mOS monitoring API functions can be used for monitoring and processing incoming packets and flows that meet the application’s event conditions.

5.4.1. Monitoring Packet Metadata and Payload

Inside a callback function, you can use mtcp_getlastpkt() to find out which packet triggered the event. mtcp_getlastpkt() fetches a copy of the last Ethernet frame of the given flow observed by the stack. The function is read-only: modifying the returned packet metadata (the 4th parameter, a struct pkt_info) never changes the outgoing packet that will be forwarded.

mtcp_getlastpkt() provides detailed packet information from the Ethernet level (layer 2) up to the TCP level (layer 4), including the header fields. See the mtcp_getlastpkt() man page for the full list of information the function returns.

For example, an L3/L4 firewall or ACL that looks up rules by 5-tuple can use mtcp_getlastpkt() to retrieve the source and destination addresses and ports of the packet as follows:

/* retrieve the packet information */
struct pkt_info p;
if (mtcp_getlastpkt(mctx, msock, side, &p) < 0)
    EXIT_WITH_ERROR("Failed to get packet context!\n");

/* look up the firewall rules with the 5-tuple
   (header fields are in network byte order) */
action = FWRuleLookup(p.iph->saddr, p.iph->daddr,
                      p.tcph->source, p.tcph->dest);
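For reference, the values exposed through p.iph and p.tcph sit at fixed offsets in the frame and are stored in network byte order. The sketch below extracts them from a raw, untagged Ethernet II/IPv4/TCP frame and converts them to host byte order; struct flow_key and extract_5tuple() are hypothetical, and VLAN tags and IPv6 are deliberately not handled.

```c
#include <arpa/inet.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical flow key; all fields in host byte order. */
struct flow_key {
    uint32_t saddr, daddr;
    uint16_t sport, dport;
    uint8_t  proto;
};

/* Parse an untagged Ethernet II + IPv4 + TCP frame.
 * Returns 0 on success, -1 if the frame is not IPv4/TCP or is truncated. */
static int extract_5tuple(const uint8_t *frame, size_t len, struct flow_key *k)
{
    const size_t eth_len = 14;                     /* dst MAC, src MAC, EtherType */
    if (len < eth_len + 20)
        return -1;
    if (frame[12] != 0x08 || frame[13] != 0x00)    /* EtherType 0x0800 = IPv4 */
        return -1;

    const uint8_t *ip = frame + eth_len;
    size_t ihl = (size_t)(ip[0] & 0x0f) * 4;       /* IHL counts 32-bit words */
    if ((ip[0] >> 4) != 4 || ihl < 20 || len < eth_len + ihl + 20)
        return -1;
    if (ip[9] != 6)                                /* protocol 6 = TCP */
        return -1;

    const uint8_t *tcp = ip + ihl;
    uint32_t a;
    uint16_t p;
    memcpy(&a, ip + 12, 4); k->saddr = ntohl(a);   /* memcpy avoids unaligned reads */
    memcpy(&a, ip + 16, 4); k->daddr = ntohl(a);
    memcpy(&p, tcp, 2);     k->sport = ntohs(p);
    memcpy(&p, tcp + 2, 2); k->dport = ntohs(p);
    k->proto = ip[9];
    return 0;
}
```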

If you want to print the length values of each protocol header, you can retrieve them as follows:

/* retrieve the packet information */
struct pkt_info p;
if (mtcp_getlastpkt(mctx, msock, side, &p) < 0)
    EXIT_WITH_ERROR("Failed to get packet context!\n");

/* print the length of each protocol header */
printf("Ethernet header length: %u bytes\n", p.eth_len - p.ip_len);
printf("IP header length: %u bytes\n", p.ip_len - p.tcph->doff * 4 - p.payloadlen);
printf("TCP header length: %u bytes\n", p.tcph->doff * 4);
printf("TCP payload length: %u bytes\n", p.payloadlen);
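The same lengths can be read directly from the header fields: the IPv4 IHL and the TCP data offset both count 32-bit words, and the payload length is the IPv4 total length minus both headers (so the IP header length computed above equals IHL × 4). The sketch below shows that arithmetic on a raw frame; header_lengths() and struct hdr_lens are hypothetical, and it assumes a 14-byte untagged Ethernet header.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical result type: lengths in bytes. */
struct hdr_lens {
    unsigned eth, ip, tcp, payload;
};

/* Derive header and payload lengths from an untagged Ethernet II + IPv4 + TCP
 * frame. Returns 0 on success, -1 on truncated or inconsistent input. */
static int header_lengths(const uint8_t *frame, size_t len, struct hdr_lens *h)
{
    const size_t eth_len = 14;                          /* assumes no VLAN tag */
    if (len < eth_len + 20)
        return -1;
    const uint8_t *ip = frame + eth_len;
    unsigned ihl = (ip[0] & 0x0fu) * 4u;                /* IHL counts 32-bit words */
    unsigned ip_total = ((unsigned)ip[2] << 8) | ip[3]; /* IPv4 total length */
    if (ihl < 20 || len < eth_len + ihl + 20)
        return -1;
    const uint8_t *tcp = ip + ihl;
    unsigned doff = (tcp[12] >> 4) * 4u;                /* TCP data offset, also in words */
    if (doff < 20 || ip_total < ihl + doff)
        return -1;
    h->eth = (unsigned)eth_len;
    h->ip = ihl;
    h->tcp = doff;
    h->payload = ip_total - ihl - doff;
    return 0;
}
```

For a frame with a 20-byte IP header, a 32-byte TCP header (options present) and 10 payload bytes, the function reports 14, 20, 32 and 10.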

To measure the average TCP goodput of a flow, you can use two callback functions: one that records the connection start time, and another that recomputes the goodput on every packet.

static void
OnConnStart(mctx_t mctx, int msock, int side, event_t ev, struct filter_arg *f)
{
    uint32_t *ts;

    if ((ts = malloc(sizeof(uint32_t))) == NULL)
        EXIT_WITH_ERROR("malloc() error\n");

    /* retrieve and save connection start clock time (in milliseconds) */
    if (((*ts) = mtcp_cb_get_ts(mctx)) == 0)
        EXIT_WITH_ERROR("mtcp_cb_get_ts() error\n");

    /* the application must free this buffer when the connection ends */
    mtcp_set_uctx(mctx, msock, (void *) ts);
}

static void
OnPacketIn(mctx_t mctx, int msock, int side, event_t ev, struct filter_arg *f)
{
    double bw, time_elapsed;
    uint32_t *ts_conn_start;

    /* retrieve the packet information */
    struct pkt_info p;
    if (mtcp_getlastpkt(mctx, msock, side, &p) < 0)
        EXIT_WITH_ERROR("Failed to get packet context!\n");

    /* calculate the time elapsed since connection start in seconds */
    if ((ts_conn_start = (uint32_t *)mtcp_get_uctx(mctx, msock)) == NULL)
        return;                       /* no start time recorded for this flow */
    time_elapsed = (p.cur_ts - *ts_conn_start) / 1000.0;
    if (time_elapsed <= 0)
        return;                       /* avoid dividing by zero */

    /* derive the TCP goodput in Gbps */
    bw = ((double) p.offset * 8 / 1000.0 / 1000.0 / 1000.0) / time_elapsed;
    printf("TCP goodput: %.2f (Gbps)\n", bw);
}

...

/* register callback functions (see Section 5.3) */
if (mtcp_register_callback(mctx, msock, MOS_ON_CONN_START, MOS_NULL, OnConnStart) == -1)
    EXIT_WITH_ERROR("Failed to register callback function\n");

if (mtcp_register_callback(mctx, msock, MOS_ON_PKT_IN, MOS_NULL, OnPacketIn) == -1)
    EXIT_WITH_ERROR("Failed to register callback function\n");
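The arithmetic inside OnPacketIn can be isolated and checked on its own. This sketch assumes, as in the example, 32-bit millisecond timestamps and a byte count taken from the stream offset; goodput_gbps() is a hypothetical helper, not a mOS function.

```c
#include <stdint.h>

/* Average goodput in Gbps for `bytes` delivered between start_ms and now_ms
 * (32-bit millisecond timestamps). Returns 0.0 if no time has elapsed. */
static double goodput_gbps(uint64_t bytes, uint32_t start_ms, uint32_t now_ms)
{
    uint32_t elapsed_ms = now_ms - start_ms;  /* unsigned math tolerates the clock wrapping once */
    if (elapsed_ms == 0)
        return 0.0;
    double gbits = (double)bytes * 8.0 / 1e9;
    return gbits / (elapsed_ms / 1000.0);
}
```

For example, 125,000,000 bytes delivered over 1000 ms is 1.0 Gbps, and computing the difference in unsigned 32-bit arithmetic keeps the result correct even if the millisecond counter wraps between the two timestamps.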

5.4.2. Modifying or Dropping a Packet

When a packet arrives from the network, the mOS networking stack lets you modify the packet before it is forwarded, or drop it.

First, to modify a packet, use mtcp_setlastpkt() with the MOS_OVERWRITE flag in the option parameter. This function can overwrite any header field of the packet (Ethernet, IP, or TCP) or the TCP payload. The following example shows how a mOS application can modify the destination address field in the IP header and the destination port field in the TCP header (e.g., for NAT). Note that whenever you modify a TCP/IP header field, you should also update the IP and TCP checksum fields by adding the MOS_UPDATE_IP_CHKSUM and MOS_UPDATE_TCP_CHKSUM flags.

/* byte offset of the destination address and port fields in each header */
#define OFFSET_DST_IP   16
#define OFFSET_DST_PORT 2

/* ip (in_addr_t) and port (in_port_t) hold the new values in network byte order */

/* update destination host address */
if (mtcp_setlastpkt(mctx, sock, 0, OFFSET_DST_IP,
                    (uint8_t *)&ip, sizeof(in_addr_t),
                    MOS_IP_HDR | MOS_OVERWRITE) < 0) {
    EXIT_WITH_ERROR("mtcp_setlastpkt() failed\n");
    return -1;
}

/* update destination port number (plus, update both checksums) */
if (mtcp_setlastpkt(mctx, sock, 0, OFFSET_DST_PORT,
                    (uint8_t *)&port, sizeof(in_port_t),
                    MOS_TCP_HDR | MOS_OVERWRITE
                    | MOS_UPDATE_IP_CHKSUM | MOS_UPDATE_TCP_CHKSUM) < 0) {
    EXIT_WITH_ERROR("mtcp_setlastpkt() failed\n");
    return -1;
}
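The checksum flags spare you from recomputing checksums by hand. To show what such an update involves, the sketch below rewrites the IPv4 destination address at byte offset 16 (the same offset as OFFSET_DST_IP) in a raw header and recomputes the header checksum with the RFC 1071 Internet checksum. It is a standalone illustration with hypothetical function names, not necessarily how mOS implements MOS_UPDATE_IP_CHKSUM; the TCP checksum additionally covers a pseudo-header and the payload, which is not shown.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* RFC 1071 Internet checksum over len bytes (big-endian 16-bit words). */
static uint16_t inet_checksum(const uint8_t *data, size_t len)
{
    uint32_t sum = 0;
    for (size_t i = 0; i + 1 < len; i += 2)
        sum += ((uint32_t)data[i] << 8) | data[i + 1];
    if (len & 1)                           /* pad an odd trailing byte with zero */
        sum += (uint32_t)data[len - 1] << 8;
    while (sum >> 16)                      /* fold carries back into 16 bits */
        sum = (sum & 0xffff) + (sum >> 16);
    return (uint16_t)~sum;
}

/* Overwrite the IPv4 destination address (byte offset 16) and recompute
 * the header checksum stored at byte offset 10. */
static void rewrite_ipv4_dst(uint8_t *iph, const uint8_t new_dst[4])
{
    size_t ihl = (size_t)(iph[0] & 0x0f) * 4;
    memcpy(iph + 16, new_dst, 4);
    iph[10] = 0;                           /* checksum field is zero while summing */
    iph[11] = 0;
    uint16_t c = inet_checksum(iph, ihl);
    iph[10] = (uint8_t)(c >> 8);           /* store in network byte order */
    iph[11] = (uint8_t)(c & 0xff);
}
```

Once a valid checksum is in place, running inet_checksum() over the whole header yields 0, which is a quick way to verify a rewritten header.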

Second, to drop a packet, use mtcp_setlastpkt() with the MOS_DROP flag in the option parameter. Note that this flag makes the function ignore all other option flags.

if (mtcp_setlastpkt(mctx, sock, side, 0, NULL, 0, MOS_DROP) < 0) {
    EXIT_WITH_ERROR("mtcp_setlastpkt() failed\n");
    return -1;
}

5.4.3. Generating and Sending a Packet

When implementing a middlebox, you may sometimes need to send a new, self-constructed packet rather than just modify or forward an incoming one. For such applications, mtcp_sendpkt() sends a new packet and injects it into the network.

In the following example, we create a copy of each incoming packet and send it to a logger. The code first captures the incoming packet with mtcp_getlastpkt(), rewrites the destination address and port in the packet information (struct pkt_info), and sends it to the network. As a result, for every incoming packet, a duplicate packet (identical except for its destination address and port) is sent to the logger (at 10.0.0.10:3333 in this example).

/* capture the incoming packet */
struct pkt_info p;
if (mtcp_getlastpkt(mctx, msock, side, &p) < 0)
    EXIT_WITH_ERROR("Failed to get packet context!\n");

/* replace destination address and port with those of the logger */
const char *loggerIP = "10.0.0.10";
int loggerPort = 3333;
p.iph->daddr = inet_addr(loggerIP);
p.tcph->dest = htons(loggerPort);

/* send a separate packet copy to the logger */
if (mtcp_sendpkt(mctx, msock, &p) < 0)
    TRACE_ERROR_EXIT("mtcp_sendpkt() error\n");

Note

If you want to communicate with a TCP end host over a TCP connection, it is better to create the connection with our mTCP API (see the mTCP networking API section of our man pages).

Attention

Currently, mtcp_sendpkt() can send only TCP packets. We plan to support sending packets of other protocols (e.g., UDP) in the near future.

5.4.4. Monitoring Reassembled TCP Payload

For middlebox applications that need to monitor TCP payload (e.g., an IDS searching traffic for known attack patterns), mOS provides a TCP payload reassembly feature. By default, mOS maintains its own payload reassembly buffer, which handles a number of cumbersome corner cases on its own, including out-of-order packet arrival, overlapping payload, and buffer overrun (see Section 4.3 of the mOS paper for more details).

mOS exposes the reconstructed TCP payload in the reassembly buffer via mtcp_peek(). To monitor the reconstructed payload of a TCP flow, all you need to do is call mtcp_peek() on each MOS_ON_CONN_NEW_DATA event, which signals that new reassembled payload is available. For example, our mOS port of the Snort IDS performs payload inspection as follows (some details are omitted):

/* triggered on TCP payload arrival; reads the payload
   and forwards it to the Snort detection engine */
static void
callback_flush_data(mctx_t mctx, int msock, int side,
                    uint64_t events, struct filter_arg *arg)
{
    ...
    /* retrieve reassembled payload into an application-level buffer */
    ret = mtcp_peek(mctx, msock, side,
                    PAYLOAD_OFFSET(buf + win_copy_len),
                    MIN(FLUSH_SIZE, cd->svr_bytes_rcvd - cd->svr_bytes_flshd));
    ...
    /* now jump to the Snort detection engine */
    DispatchRebuiltPacket(cd, &hdr,
                          (const unsigned char *)ETH_OFFSET(buf));
    ...
}

...

/* register a callback function for TCP payload arrival event */
if (mtcp_register_callback(mctx, mlisten,
                           MOS_ON_CONN_NEW_DATA,
                           MOS_NULL,
                           callback_flush_data) == -1)
    EXIT_WITH_ERROR("Failed to register callback func!\n");
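To make the reassembly behavior described above concrete, here is a toy, fixed-size reassembly buffer that accepts out-of-order segments and exposes only the contiguous in-order prefix, roughly what a peek returns. It is a simplified model under stated assumptions (byte-granular bookkeeping, stream offsets starting at 0, no wraparound, first arrival wins on overlap); reasm_buf, reasm_insert() and reasm_peek() are hypothetical names, and mOS's actual buffer is more elaborate.

```c
#include <stdbool.h>
#include <stddef.h>

#define RB_SIZE 64   /* hypothetical capacity in bytes */

/* Toy reassembly buffer indexed by stream offset (no wraparound). */
struct reasm_buf {
    char   data[RB_SIZE];
    bool   have[RB_SIZE];   /* which bytes have arrived */
    size_t read_off;        /* bytes already consumed by reasm_peek() */
};

/* Store a segment at its stream offset, even if it arrives out of order.
 * Bytes that are already buffered are kept (first arrival wins on overlap).
 * Returns -1 if the segment does not fit, i.e. the buffer would overrun. */
static int reasm_insert(struct reasm_buf *rb, size_t off, const char *seg, size_t len)
{
    if (off + len > RB_SIZE)
        return -1;
    for (size_t i = 0; i < len; i++) {
        if (!rb->have[off + i]) {
            rb->data[off + i] = seg[i];
            rb->have[off + i] = true;
        }
    }
    return 0;
}

/* Copy up to cap in-order bytes that have not been read yet, stopping at the
 * first hole, and consume them. Returns the number of bytes copied. */
static size_t reasm_peek(struct reasm_buf *rb, char *out, size_t cap)
{
    size_t n = 0;
    while (n < cap && rb->read_off + n < RB_SIZE && rb->have[rb->read_off + n]) {
        out[n] = rb->data[rb->read_off + n];
        n++;
    }
    rb->read_off += n;
    return n;
}
```

If "world" arrives at offset 5 before "hello" at offset 0, the first peek returns nothing because of the hole at [0, 5); once the gap is filled, a single peek returns all ten bytes in order.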

Note that if new packets keep arriving after the receive buffer becomes full, the reassembly buffer is overrun and a MOS_ON_ERROR event is triggered. To deal with this corner case, handle the MOS_ON_ERROR event properly, either by increasing the reassembly buffer size with mtcp_setsockopt() or by consuming the payload in the reassembly buffer with mtcp_peek().

/* triggered on MOS_ON_ERROR (e.g., receive buffer is full) */
static void
callback_on_error(mctx_t mctx, int msock, int side,
                  uint64_t events, struct filter_arg *arg)
{
    /* log the error event and terminate */
    EXIT_WITH_ERROR("[mOS ERROR EVENT] receive buffer might be full.\n");
}

/* register a callback function for the error event */
if (mtcp_register_callback(mctx, mlisten,
                           MOS_ON_ERROR,
                           MOS_NULL,
                           callback_on_error) == -1)
    EXIT_WITH_ERROR("Failed to register callback func!\n");

If the buffer overrun is not handled, mOS overwrites the reassembly buffer with the newer payload. To notify the application, the next mtcp_peek() call returns the negated number of bytes that were overwritten, and errno is set to ENODATA.
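The return-value rule just described can be captured in a small helper. bytes_lost_on_peek() is hypothetical; it only encodes the rule stated in this section (a negative return with errno set to ENODATA means that many bytes were overwritten) and takes the return value and errno as plain arguments so that it can be exercised without mOS.

```c
#include <errno.h>
#include <sys/types.h>

/* Hypothetical helper encoding the overrun rule above.
 * ret: value returned by a peek call; err: errno captured right after it.
 * Returns the number of bytes lost to an overrun (0 if none),
 * or -1 for any other error. */
static long bytes_lost_on_peek(ssize_t ret, int err)
{
    if (ret >= 0)
        return 0;                 /* success: ret bytes were read */
    if (err == ENODATA)
        return (long)(-ret);      /* buffer was overrun by -ret bytes */
    return -1;                    /* some other failure */
}
```

In a callback it would be called right after mtcp_peek(), for example `long lost = bytes_lost_on_peek(ret, errno);`, before any other library call can change errno.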

Attention

Currently, mOS does not pass the root cause of a MOS_ON_ERROR event to its callback function. We plan to support this in the near future.

5.4.5. Monitoring Fragmented TCP Segments

Besides the reassembled payload, mOS also exposes fragmented TCP segments (i.e., non-contiguous TCP payload) that arrived out of order.

A mOS application can monitor fragmented payload via mtcp_ppeek(). In addition, mtcp_getsockopt() provides metadata about the reassembly buffer (e.g., buffer offsets or the corresponding sequence numbers). The following example shows how to use these functions: it prints each fragmented payload of a given TCP flow along with its offset.

int i, read_len;
struct tcp_ring_fragment frags[MAX_FRAG_NUM];
socklen_t nfrags = MAX_FRAG_NUM;
/* buf: application buffer large enough to hold one fragment (declared elsewhere) */

/* retrieve offset metadata on fragmented payloads */
if (mtcp_getsockopt(mctx, msock, SOL_MONSOCKET, (side == MOS_SIDE_CLI)
                    ? MOS_FRAGINFO_CLIBUF : MOS_FRAGINFO_SVRBUF,
                    frags, &nfrags) < 0)
    EXIT_WITH_ERROR("mtcp_getsockopt() error\n");

/* for each fragment, print the offset information and payload */
for (i = 0; i < (int)nfrags; i++) {
    /* print offset and length of each segment */
    printf("[%d] offset = %llu / len = %u / ", i,
           (unsigned long long)frags[i].offset, (unsigned)frags[i].len);

    /* peek the payload of each TCP fragment */
    read_len = mtcp_ppeek(mctx, msock, side, buf, frags[i].offset, frags[i].len);
    if (read_len < 0)
        EXIT_WITH_ERROR("mtcp_ppeek() error\n");

    /* the payload is not NUL-terminated, so bound the print by read_len */
    printf("payload = [%.*s]\n", read_len, buf);
}
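The fragment metadata returned for MOS_FRAGINFO_CLIBUF/MOS_FRAGINFO_SVRBUF describes contiguous runs of received bytes separated by holes. As an illustration of that idea only, the sketch below derives (offset, len) runs from a per-byte receive map; struct frag and find_fragments() are hypothetical and merely mirror the offset/len fields used in the example above.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical fragment descriptor mirroring the offset/len pair used above. */
struct frag {
    uint64_t offset;   /* stream offset of the first byte in the run */
    uint32_t len;      /* number of contiguous bytes */
};

/* Scan a per-byte "received" map of n bytes and record each contiguous run
 * as a fragment. Returns the number of fragments written (at most max). */
static size_t find_fragments(const bool *have, size_t n, struct frag *out, size_t max)
{
    size_t cnt = 0, i = 0;
    while (i < n && cnt < max) {
        if (!have[i]) {            /* skip a hole */
            i++;
            continue;
        }
        size_t start = i;
        while (i < n && have[i])   /* extend the run */
            i++;
        out[cnt].offset = start;
        out[cnt].len = (uint32_t)(i - start);
        cnt++;
    }
    return cnt;
}
```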

5.4.6. Monitoring TCP Connection State

The mOS networking stack monitors the packets of each TCP flow in both directions and emulates the TCP state of each end. The following example shows how to monitor TCP state transitions throughout a flow's lifecycle. Whenever a TCP state transition occurs, it can be detected by listening for the MOS_ON_TCP_STATE_CHANGE event. Inside the callback for that event, mtcp_getsockopt() with MOS_TCP_STATE_CLI or MOS_TCP_STATE_SVR returns the current TCP state of the client side or the server side, respectively.

/* convert a state value (integer) to a string */
const char *
strstate(int state)
{
    switch (state) {
#define CASE(s) case TCP_##s: return #s
    CASE(CLOSED);
    CASE(LISTEN);
    CASE(SYN_SENT);
    CASE(SYN_RCVD);
    CASE(ESTABLISHED);
    CASE(FIN_WAIT_1);
    CASE(FIN_WAIT_2);
    CASE(CLOSE_WAIT);
    CASE(CLOSING);
    CASE(LAST_ACK);
    CASE(TIME_WAIT);
#undef CASE
    default:
        return "-";
    }
}

/* triggered on MOS_ON_TCP_STATE_CHANGE */
static void
callback_on_state_change(mctx_t mctx, int msock, int side,
                         uint64_t events, struct filter_arg *arg)
{
    int state;
    socklen_t intlen = sizeof(int);

    /* retrieve the current TCP state of the given side */
    if (mtcp_getsockopt(mctx, msock, SOL_MONSOCKET, (side == MOS_SIDE_CLI)
                        ? MOS_TCP_STATE_CLI : MOS_TCP_STATE_SVR,
                        &state, &intlen) < 0)
        EXIT_WITH_ERROR("mtcp_getsockopt() error\n");

    if (side == MOS_SIDE_CLI)
        printf("client-side state changed to %s\n", strstate(state));
    else
        printf("server-side state changed to %s\n", strstate(state));
}

/* register a callback function which tracks TCP state change on sender side */
if (mtcp_register_callback(mctx, mlisten,
                           MOS_ON_TCP_STATE_CHANGE,
                           MOS_HK_SND,
                           callback_on_state_change) == -1)
    EXIT_WITH_ERROR("Failed to register callback func!\n");

5.4.7. Querying End-point Host Address

In the BSD socket API, getpeername() returns the address of the peer connected to the given socket. Our mtcp_getpeername() function works similarly, but differs in one respect. From a middlebox's point of view, each connection has two end-host peers, one at each end. Therefore, mtcp_getpeername() takes an extra (fifth) parameter, side, which specifies which peer to query (either MOS_SIDE_CLI or MOS_SIDE_SVR).

struct sockaddr_in addr;
socklen_t len = sizeof(addr);

if (mtcp_getpeername(mctx, sock, (struct sockaddr *)&addr, &len,
                     MOS_SIDE_CLI) < 0) {
    TRACE_ERROR("mtcp_getpeername() failed for sock=%d\n", sock);
    return;
}

/* the port is an integer, so print it with %u after ntohs() */
printf("[client] %s:%u\n", inet_ntoa(addr.sin_addr), (unsigned)ntohs(addr.sin_port));

To query the addresses of both the client and the server, set the side argument to MOS_SIDE_BOTH and provide an address buffer of 2 * sizeof(struct sockaddr) bytes, as shown below. On success, mtcp_getpeername() fills the buffer with an array of two socket addresses: the client-side address is addr[MOS_SIDE_CLI] and the server-side address is addr[MOS_SIDE_SVR].

struct sockaddr_in addr[2];
socklen_t len = sizeof(addr);   /* already 2 * sizeof(struct sockaddr_in) */

if (mtcp_getpeername(mctx, sock, (struct sockaddr *)addr, &len,
                     MOS_SIDE_BOTH) < 0) {
    TRACE_ERROR("mtcp_getpeername() failed for sock=%d\n", sock);
    return;
}

printf("[client] %s:%u\n", inet_ntoa(addr[MOS_SIDE_CLI].sin_addr),
       (unsigned)ntohs(addr[MOS_SIDE_CLI].sin_port));
printf("[server] %s:%u\n", inet_ntoa(addr[MOS_SIDE_SVR].sin_addr),
       (unsigned)ntohs(addr[MOS_SIDE_SVR].sin_port));
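inet_ntoa() returns a pointer to a static buffer, so the client and server addresses above must be printed in separate printf() calls; passing both inet_ntoa() results to one call would print the same address twice. A reentrant alternative is inet_ntop(). The helper below, format_sockaddr_in() (a hypothetical name), formats any struct sockaddr_in, such as addr[MOS_SIDE_CLI] or addr[MOS_SIDE_SVR], as "ip:port" using only standard POSIX calls.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdio.h>
#include <string.h>
#include <sys/socket.h>

/* Format an IPv4 socket address as "a.b.c.d:port" into out (cap bytes).
 * Returns 0 on success, -1 on conversion error or truncation. */
static int format_sockaddr_in(const struct sockaddr_in *sa, char *out, size_t cap)
{
    char ip[INET_ADDRSTRLEN];          /* caller-owned buffer, unlike inet_ntoa() */

    if (inet_ntop(AF_INET, &sa->sin_addr, ip, sizeof ip) == NULL)
        return -1;
    int n = snprintf(out, cap, "%s:%u", ip, (unsigned)ntohs(sa->sin_port));
    return (n < 0 || (size_t)n >= cap) ? -1 : 0;
}
```

Because each call writes into its own output buffer, both peers can be formatted first and then printed together.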

5.4.8. Setting Monitoring Policy

Next, we explain how to set monitoring policies in the mOS monitoring stack.

First, mOS provides fine-grained control over TCP flow reassembly. For middleboxes that handle a large number of concurrent flows with limited resources, mOS can adapt its resource consumption (memory footprint, memory bandwidth, and computation) to the application's needs (see Section 4.4 of the mOS paper for more details). For example, an application that does not need reassembled payload can disable flow reassembly by setting the buffer size to zero on each side with mtcp_setsockopt() and the MOS_CLIBUF or MOS_SVRBUF option.

/* disable socket buffer */
int optval = 0;

if (mtcp_setsockopt(mctx, sock, SOL_MONSOCKET, MOS_CLIBUF,
                    &optval, sizeof(optval)) == -1) {
    fprintf(stderr, "Could not disable CLIBUF!\n");
}

if (mtcp_setsockopt(mctx, sock, SOL_MONSOCKET, MOS_SVRBUF,
                    &optval, sizeof(optval)) == -1) {
    fprintf(stderr, "Could not disable SVRBUF!\n");
}

Second, when a (partially or fully) retransmitted packet arrives, its content may differ from that of the original transmission. By default, the mOS monitoring stack keeps the previously received content and does not merge the new content into its payload reassembly buffer. Since end-host operating systems differ in this policy, mOS also provides an option to overwrite the previous content in the reassembly buffer with the newly arrived payload, as shown below (see Section 4.3 of the mOS paper for more details).

/* on overlap, overwrite buffered bytes with the newly arrived payload */
int optval = MOS_OVERLAP_POLICY_LAST;

if (mtcp_setsockopt(mctx, sock, SOL_MONSOCKET, MOS_CLIOVERLAP,
                    &optval, sizeof(optval)) == -1) {
    fprintf(stderr, "Could not set CLIOVERLAP policy!\n");
}

if (mtcp_setsockopt(mctx, sock, SOL_MONSOCKET, MOS_SVROVERLAP,
                    &optval, sizeof(optval)) == -1) {
    fprintf(stderr, "Could not set SVROVERLAP policy!\n");
}
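The difference between the default policy and MOS_OVERLAP_POLICY_LAST can be modeled on a plain byte buffer. In this sketch (hypothetical names, not mOS internals), KEEP_FIRST keeps bytes that were already buffered, matching the default described above, while KEEP_LAST lets a retransmission overwrite them.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical policy names: KEEP_FIRST models the default behavior,
 * KEEP_LAST models MOS_OVERLAP_POLICY_LAST. */
enum overlap_policy { KEEP_FIRST, KEEP_LAST };

/* Merge a segment of len bytes at stream offset off into buf.
 * have[] marks bytes that were already received. */
static void merge_segment(char *buf, bool *have, size_t off,
                          const char *seg, size_t len, enum overlap_policy p)
{
    for (size_t i = 0; i < len; i++) {
        if (!have[off + i] || p == KEEP_LAST)
            buf[off + i] = seg[i];     /* new byte, or policy allows overwrite */
        have[off + i] = true;
    }
}
```

After "abc" at offset 0 and a conflicting "XYZ" at offset 1, KEEP_FIRST yields "abcZ" and KEEP_LAST yields "aXYZ". An IDS that must see the same bytes as the receiving host would pick the policy that matches the end host's operating system.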

Note

Note that mtcp_setsockopt() with these options (MOS_CLIBUF, MOS_SVRBUF, MOS_CLIOVERLAP, or MOS_SVROVERLAP) can be called either globally (e.g., on a mOS passive monitoring socket created via mtcp_socket() with MOS_SOCK_MONITOR_STREAM) or on a per-flow basis (e.g., on a mOS active monitoring socket passed via the sock parameter of each callback).

5.4.9. Disabling Packet/Flow Monitoring

Some applications may want to stop monitoring certain flows. In this case, call mtcp_setsockopt() with the MOS_STOP_MON option. For example, a mOS application can disable monitoring of a TCP flow entirely by passing MOS_SIDE_BOTH.

int optval = MOS_SIDE_BOTH;

if (mtcp_setsockopt(mctx, msock, SOL_MONSOCKET,
                    MOS_STOP_MON, &optval, sizeof(optval)) < 0)
    EXIT_WITH_ERROR("Failed to stop monitoring conn with sockid: %d\n",
                    msock);

To disable monitoring selectively on one side only, call mtcp_setsockopt() with the MOS_STOP_MON option and MOS_SIDE_CLI or MOS_SIDE_SVR.

int optval = MOS_SIDE_CLI;

if (mtcp_setsockopt(mctx, msock, SOL_MONSOCKET,
                    MOS_STOP_MON, &optval, sizeof(optval)) < 0)
    EXIT_WITH_ERROR("Failed to stop monitoring conn with sockid: %d\n",
                    msock);

Note

Note that mtcp_setsockopt() with MOS_STOP_MON can be called only on a per-flow basis (e.g., on a mOS active monitoring socket passed via the sock parameter of each callback).

5.4.10. Saving and Loading User-level Metadata

Several mOS applications maintain per-flow metadata at the user level, typically per-flow statistics or context that the mOS networking stack does not provide. In this case, mtcp_set_uctx() and mtcp_get_uctx() can be used as shown below. Note that the developer is responsible for allocating and freeing the memory for user-level metadata.

static void
OnConnStart(mctx_t mctx, int msock, int side, event_t ev, struct filter_arg *f)
{
    uint32_t *pkt_cnt;

    if ((pkt_cnt = malloc(sizeof(uint32_t))) == NULL)
        EXIT_WITH_ERROR("malloc() error\n");

    (*pkt_cnt) = 0;
    mtcp_set_uctx(mctx, msock, (void *) pkt_cnt);
}

static void
OnPacketIn(mctx_t mctx, int msock, int side, event_t ev, struct filter_arg *f)
{
    uint32_t *pkt_cnt = (uint32_t *)mtcp_get_uctx(mctx, msock);

    if (pkt_cnt != NULL)   /* guard against a missing context */
        (*pkt_cnt)++;
}

static void
OnConnEnd(mctx_t mctx, int msock, int side, event_t ev, struct filter_arg *f)
{
    uint32_t *pkt_cnt = (uint32_t *)mtcp_get_uctx(mctx, msock);

    if (pkt_cnt == NULL)
        return;
    printf("[sock %d] total packets = %u\n", msock, (*pkt_cnt));
    free(pkt_cnt);
    mtcp_set_uctx(mctx, msock, NULL);   /* avoid a dangling pointer or double free */
}

5.5. Application Destroy Routine

After the application thread registers callback functions for the events of interest, the mOS threads on each CPU core perform flow monitoring and trigger the callbacks when the event conditions are met. Meanwhile, the application thread should wait until all the mOS threads finish (or until the user sends an interrupt signal). The application thread can call mtcp_app_join() to wait until all the mOS threads terminate, as shown below.

When a mOS thread finishes, close its passive monitoring socket with mtcp_close(), then clean up the per-core metadata with mtcp_destroy_context(). After the per-core teardown has finished on every core, call mtcp_destroy() to clean up all global mOS networking stack state before exiting the program.

for (i = 0; i < g_mcfg.num_cores; i++) {
    /* wait for the mOS thread to finish */
    mtcp_app_join(g_mctx[i]);

    /* close the monitoring socket */
    mtcp_close(g_mctx[i], sock[i]);

    /* tear down the per-core context */
    mtcp_destroy_context(g_mctx[i]);
}

mtcp_destroy();